Know Everything about Big Data Hadoop before you Join the Training

Category: Hadoop Posted: Jan 24, 2017 By: Robert

Due to the rapid development of new technologies and devices, the amount of data produced by humankind is growing very quickly every year. It is important to ensure that this data is stored properly for future use.

Research suggests that the amount of data produced from the dawn of time until 2003 was five billion gigabytes, which would fill an entire football field if stored on stacked disks. A similar amount of data was generated every two days in 2011, and every 10 minutes in 2013. The rate at which data is growing is enormous.

What is Hadoop?

Hadoop is an Apache open-source framework written in Java that allows distributed processing of large datasets across clusters of computers using simple programming models. A Hadoop application works in an environment that provides distributed storage and computation across clusters of computers. Hadoop is designed to scale up from a single server to thousands of machines, each offering local computation and storage.

Hadoop was introduced in 2006 by Doug Cutting and Mike Cafarella, two computer scientists, to support distribution for the Nutch search engine. They were inspired by Google’s MapReduce, a software framework in which an application is broken down into numerous small parts, called fragments or blocks, each of which can be run on any node in the cluster.
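To make the MapReduce idea concrete, here is a minimal word-count sketch in plain Python. It does not use the Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are illustrative assumptions, and the blocks are processed one after another rather than on separate cluster nodes.

```python
from collections import defaultdict


def map_phase(block):
    """Emit a (word, 1) pair for every word in one block of text."""
    return [(word.lower(), 1) for word in block.split()]


def shuffle(pairs):
    """Group the emitted values by key, between the map and reduce steps."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts collected for each word."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(blocks):
    # In a real cluster each block could be mapped on a different node;
    # this sketch simply loops over the blocks sequentially.
    mapped = []
    for block in blocks:
        mapped.extend(map_phase(block))
    return reduce_phase(shuffle(mapped))


# Two small "blocks" of input text, standing in for pieces of a large file.
print(word_count(["big data big cluster", "data node data"]))
```

Because each block is mapped independently of the others, the map step is the part that can be spread across many machines.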

Unstructured data, such as log files, feeds, media files, and data from the internet in general, is becoming more and more relevant to businesses. Every day a large amount of unstructured data lands on our machines. The big challenge is not storing large data sets in our systems but retrieving and analyzing this kind of big data within organizations.

Hadoop is a framework that can store and analyze data spread across different machines at different locations quickly and very cost-effectively.


What are the Key Features of Hadoop?

Because of its distributed processing capability, Hadoop can manage very large volumes of structured and unstructured data more efficiently than a traditional enterprise data warehouse.

The key features to know are listed below:

- Flexibility in Data Processing

One of the biggest challenges organizations faced in the past was managing unstructured data. It is said that only 20% of the data in any company or organization is structured, while the rest is unstructured, and its value has largely been ignored for lack of technology to analyse it.

Hadoop handles structured, unstructured, encoded, formatted, or any other type of data. It makes unstructured data useful in the decision-making process.

- Easily Scalable

Scalability is one of Hadoop's important features. Because it is an open-source platform that runs on industry-standard hardware, Hadoop is highly scalable: nodes can easily be added to the system as data volumes or processing needs grow, without altering anything in the existing systems or programs.

- Fault Tolerant

Hadoop stores data in HDFS, where it is automatically replicated to two other locations. So even if one or two of the systems fail, the file is still available on a third system, which provides a high level of fault tolerance.
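The replication idea can be illustrated with a small toy simulation in Python. This is only a sketch under stated assumptions: it keeps three copies of each block (the original plus two replicas, as described above) and places them round-robin, which is a simplification and not the placement policy HDFS actually uses. The names `place_replicas`, `available_blocks`, and `survives_any_failures` are hypothetical.

```python
import itertools

REPLICATION_FACTOR = 3  # assumption: one original plus two copies


def place_replicas(block_ids, nodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct nodes (toy round-robin policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = {
            nodes[(i + k) % len(nodes)] for k in range(replication)
        }
    return placement


def available_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return {block for block, holders in placement.items() if holders - failed}


def survives_any_failures(placement, nodes, count):
    """True if every block stays readable for every set of `count` failed nodes."""
    return all(
        available_blocks(placement, failed) == set(placement)
        for failed in itertools.combinations(nodes, count)
    )


nodes = ["n1", "n2", "n3", "n4", "n5"]
placement = place_replicas(["b0", "b1", "b2", "b3"], nodes)
print(survives_any_failures(placement, nodes, 2))  # any two nodes may fail
print(survives_any_failures(placement, nodes, 3))  # three failures can lose a block
```

With three copies of every block, losing any two machines still leaves one copy readable, which is the guarantee the paragraph above describes.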

- Great at Faster Data Processing

Loading large amounts of data with traditional ETL and batch processes takes hours, days, or even weeks, while the need to analyse data in real time becomes more critical every day.

Hadoop addresses this drawback: it excels at high-volume batch processing because of its capacity for parallel processing. It can run batch processes up to 10 times faster than a single server or a mainframe.

- Ecosystem is Robust

Hadoop has a very strong ecosystem that is well suited to the analytical needs of developers and of small to large organizations. The Hadoop ecosystem comes with a suite of tools and technologies that make it suitable for a wide variety of data processing needs.

To name a few, the Hadoop ecosystem includes projects such as MapReduce, Hive, HBase, ZooKeeper, HCatalog, and Apache Pig, and many new tools and technologies are being added to the ecosystem as the market grows.

- Very Cost Effective

Hadoop generates cost benefits by bringing massively parallel computing to commodity servers, resulting in a substantial reduction in the cost per terabyte of storage, which in turn makes it economical to model all your data.

It was developed to help Internet-based companies that deal with prodigious volumes of data. According to some analysts, the cost of a Hadoop data management system, including hardware, software, and other expenses, comes to around $1,000 a terabyte, about one-fifth to one-twentieth the cost of other data management technologies.
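The arithmetic behind that comparison can be worked through in a few lines of Python. The $1,000 figure and the one-fifth to one-twentieth ratio come from the analyst estimate quoted above; the 100 TB data set is a hypothetical size chosen only for illustration.

```python
HADOOP_COST_PER_TB = 1_000  # USD per terabyte, the analyst estimate quoted above


def implied_alternative_cost_per_tb(hadoop_cost=HADOOP_COST_PER_TB):
    """If Hadoop costs 1/5 to 1/20 as much, alternatives cost 5x to 20x as much."""
    return hadoop_cost * 5, hadoop_cost * 20


def storage_cost(terabytes, cost_per_tb):
    """Total cost of managing `terabytes` of data at a given per-terabyte price."""
    return terabytes * cost_per_tb


low, high = implied_alternative_cost_per_tb()
data_size_tb = 100  # hypothetical data set size

print("Hadoop:", storage_cost(data_size_tb, HADOOP_COST_PER_TB))
print("Alternatives:", storage_cost(data_size_tb, low), "to", storage_cost(data_size_tb, high))
```

In other words, the quoted ratio implies roughly $5,000 to $20,000 per terabyte for the other technologies, so the gap widens quickly as the data volume grows.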


Benefits of Hadoop in Industry

- Security and Risk Management

Nowadays, as fraud and security issues become more frequent and sophisticated, conventional security solutions are not up to the challenge of reliably protecting company assets. Hadoop, on the other hand, can help an organization analyse large amounts and different types of data in real time, accelerate threat analysis, and improve its ability to assess risk by using sophisticated machine learning models.

- Operational Intelligence

To remain competitive, an organization must keep looking for ways to increase its productivity and profitability. Hadoop can help achieve this: Cisco, for example, provides infrastructure and analytics to support Hadoop distributions such as MapR, and this type of joint solution can give you the ability to evaluate data at the speed your business demands.

- Marketing Optimization

In an effort to understand customers better, you may find yourself drowning in data as the number of social media channels that customers use keeps growing.

This is where Hadoop comes into the picture: it can be used to cost-effectively integrate and analyse your disparate data in order to gain deeper customer insights, develop personalized real-time customer relationships, and increase revenue.

- Enterprise Data Hub

In industry, Hadoop can be used as a cost-effective enterprise data hub (EDH) to store, transform, filter, analyse, cleanse, and extract new value from all kinds of data.

Building a successful EDH depends on selecting the right technology in three key areas: the infrastructure, the data processing platform, and a foundational system to drive EDH applications.


Salary Trends

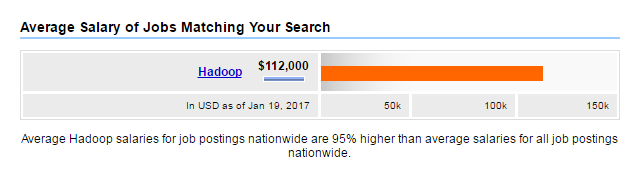

For professionals looking for a richly rewarded career, Hadoop and Big Data technology can help them attain their goals. As companies and organizations struggle to make sense of their big data, they are willing to pay premium packages for competent big data professionals.

Big Data Hadoop technology has paid increasing dividends since it burst into business consciousness and gained wide enterprise adoption.

Given the current talent crunch in Hadoop technology, organizations are willing to spend as much as they can to hire professionals with expertise in Hadoop.

According to statistics from indeed.com, the average salary for a Hadoop Developer is $112,000.

The image below shows this.

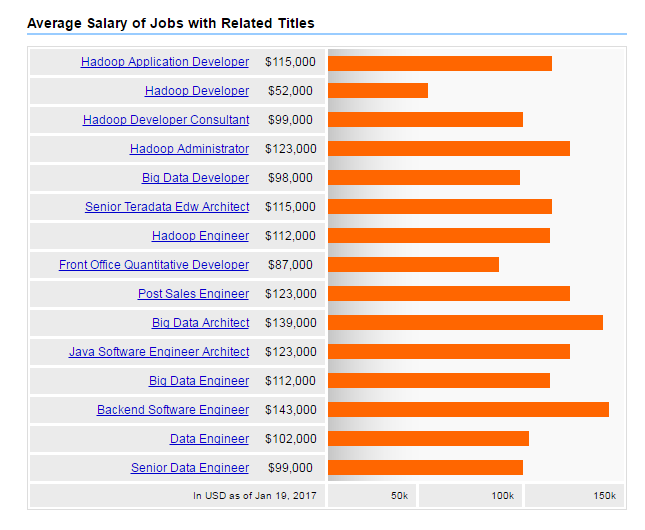

Other Hadoop-related jobs and their average salaries are shown below.


Job Market

There is strong competition in the IT industry for big data jobs, so professionals need to make sure they are well suited for the job by arming themselves with the right tools (Hadoop, NoSQL, and Spark).

According to statistics from Indeed.com, there are more than 6,000 Hadoop job postings worldwide, of which over 60% are in the USA. Out of every 100 listed jobs, at least 50 positions remain vacant, given the varied skill sets the jobs require.

Based on the usual trend of Hadoop job postings in the US, the average salary of a Hadoop developer is around $110,000, which is 95% higher than the average salary for all job postings nationwide.

It is also said that the highest Hadoop salary goes to Hadoop administrators, at $123,000.

Statistics also reveal that in 2015 there were nearly 500 open jobs for Big Data Hadoop developers in San Francisco, California, and more than 450 open jobs at startups for Big Data Hadoop engineers around the Bay Area, California.

Big Data Hadoop engineers expect a 9.3 percent boost in initial pay in 2017, with average salaries ranging from $119,250 to $168,250.

Even when the salaries offered by two companies are both reasonable, the second company might pay more for a hire because of its greater involvement in big data development using Hadoop. Most Big Data Hadoop jobs have a highly variable salary component, as there is always a company willing to outbid.
