Why is Python essential for Apache Spark and Scala Training?

Category: Apache Spark and Scala  Posted: Mar 14, 2017  By: Serena Josh

As Big Data experts have come to recognize the advantages that Scala and Python offer for Spark over Java, the standard JVM language, there has been a lot of discussion about the importance of Python in relation to Apache Spark and Scala.

Selecting a programming language for Apache Spark is a subjective issue, because the reasons a particular data scientist or data analyst chooses Python or Scala for Apache Spark might not apply to others. Data experts decide which language is the better fit for Apache Spark programming based on their use case or the specific kind of big data application being developed. It is useful for a data scientist to learn Scala, Python, R and Java for programming in Spark, and then choose a language based on how efficiently it solves the tasks at hand. Let us look at why Python is essential for Apache Spark and Scala training.

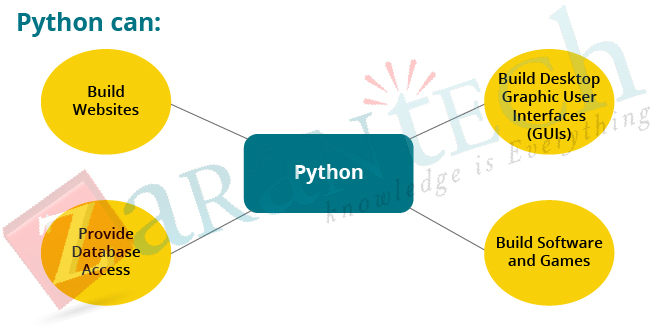

The Apache Spark framework has APIs for data analysis and processing in multiple languages, such as Java, Scala and Python. Since Java (at the time of writing) does not provide a Read-Eval-Print Loop (REPL) and the other two do, let's discuss Python and Scala in the context of Apache Spark.

Scala and Python are both easy to program in and help data experts become productive quickly. Data scientists often learn both Scala and Python for Spark, but Python is usually the second favorite language for Apache Spark, since Scala was there first.

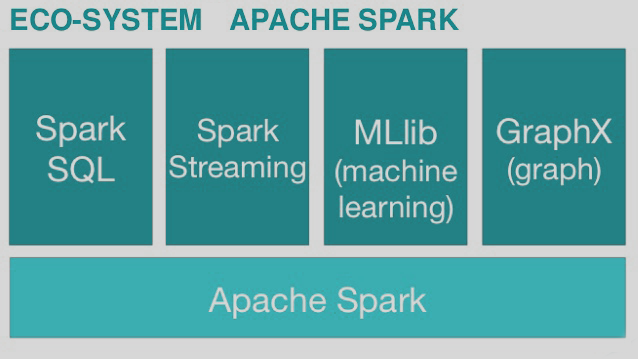

Apache Spark is a distributed computation framework that simplifies and accelerates the data crunching and analytics workflow for data scientists and engineers working with large datasets. It provides a unified interface for prototyping as well as for building production-quality applications, which makes it especially suitable for an agile approach. Spark is forecast to be the next big name among Big Data frameworks for machine learning and data science.

When a data science team wants to adopt Spark, the choice of programming language between Python and Scala becomes an essential topic of discussion. Python is seen as the scientist's language, while Scala is an engineering language regarded as a better replacement for Java.

Since the two languages have already been compared in detail elsewhere, I would like to restrict the comparison to the particular use case of building data products with Apache Spark in an agile workflow.

There are six key factors that make Python an essential skill set in Apache Spark and Scala training:

- Productivity

Coding close to the bare metal (writing low-level code with little abstraction between it and the hardware) usually produces the most optimized results. But premature optimization at an early stage causes a lot of problems. This is especially true in the initial MVP phase, when the team pushes for maximum productivity by keeping the code as short as possible, guided by a smart IDE.

Because Python is simple to learn and highly productive, developers can complete coding tasks quickly from day one. Scala requires somewhat more thought and abstraction because of its high-level functional features, but once developers become familiar with Scala, their efficiency and productivity rise quickly.

Scala and Python code can be comparably concise; how concise depends on the skill of the programmer. In Python, users can follow code execution step by step and know the state of each variable. Scala focuses more on describing the user's intent, that is, the final result the user is trying to achieve, without exposing the details of execution and implementation.
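To make the contrast concrete, the sketch below (plain Python, no Spark required) computes the same total in both styles: first step by step, with every intermediate state visible, and then as a single expression that simply describes the result. The sample data and function names are hypothetical and only serve as an illustration; the second, declarative style is the one Spark transformations tend to encourage in either language.

```python
# Hypothetical sample data: (user, purchase amount) pairs.
purchases = [("alice", 30.0), ("bob", 12.5), ("alice", 7.5), ("carol", 50.0)]


def total_for_user_stepwise(records, user):
    """Imperative style: each step and intermediate state is explicit."""
    total = 0.0
    for name, amount in records:
        if name == user:
            total += amount  # the running total is visible at every step
    return total


def total_for_user_declarative(records, user):
    """Declarative style: describe the result, not the loop."""
    return sum(amount for name, amount in records if name == user)


print(total_for_user_stepwise(purchases, "alice"))     # prints 37.5
print(total_for_user_declarative(purchases, "alice"))  # prints 37.5
```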

When it comes to IDEs, PyCharm and IntelliJ IDEA are both efficient, productive environments. Scala benefits from static types and compile-time cross-references, which let the IDE provide extra functionality naturally and without ambiguity, very much unlike scripting languages.

Examples of such functionality include finding classes and methods by name across the project and its linked dependencies, auto-completion based on type compatibility, development-time errors and warnings, "find usages" and much more. Keep in mind, however, that all these features consume considerable memory and CPU resources.

Simple, intuitive, logic-based apps are well served by Python, while for a fairly complex app it is advisable to use Scala.

- Safe Refactoring

This requirement comes mainly from the agile methodology, in which rapid prototyping is commonplace: we want to change our code safely as we perform data explorations and adjust the requirements at each iteration. Typically you first write some code implementing a piece of logic and then revise it as the exploration progresses.

Both languages need tests (unit tests, integration tests, property-based tests and more) to support safe refactoring. Scala, however, is a compiled, statically typed language, which gives it an advantage over Python in safe refactoring: many breaking changes surface as compile errors rather than at runtime.
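As a minimal sketch of the kind of test that makes refactoring safer in Python, the example below pins down the behavior of a small data-cleaning function using the standard-library `unittest` module. The function `normalize_country` and its rules are hypothetical. Because Python has no compiler to catch a changed signature or return type, tests like these are what flag a behavioral change when the function is later rewritten.

```python
import unittest


def normalize_country(raw):
    """Hypothetical cleaning step: trim whitespace and upper-case a country code.

    Returns None for missing or blank values.
    """
    if raw is None:
        return None
    cleaned = raw.strip().upper()
    return cleaned or None


class NormalizeCountryTest(unittest.TestCase):
    def test_trims_and_uppercases(self):
        self.assertEqual(normalize_country("  us "), "US")

    def test_blank_becomes_none(self):
        self.assertIsNone(normalize_country("   "))

    def test_missing_stays_none(self):
        self.assertIsNone(normalize_country(None))


if __name__ == "__main__":
    # exit=False lets the script continue after the tests finish.
    unittest.main(exit=False)
```

If `normalize_country` is later refactored, rerunning these tests confirms the new version still behaves the same on the pinned cases.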

- Spark Integration

The lion's share of time and resources is generally spent on loading, cleaning and transforming data and on extracting information from it.

Therefore, for this work it is best to express your domain-specific logic as a composition of functions without worrying about how they are executed. This is why Big Data processing is so highly functional.
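Here is a small sketch of what domain logic as a composition of functions can look like in Python: each step is a pure function on a single record, and the pipeline only chains them together. Built-in `map` and `filter` stand in for Spark so the example runs without a cluster; the record format and all function names are hypothetical. Because the steps are plain functions, the same ones could be handed to the `map` and `filter` methods of a PySpark RDD, leaving Spark to decide how and where to execute them.

```python
# Hypothetical raw input: "timestamp,user,amount" lines, some malformed.
raw_lines = [
    "2017-03-14T10:00,alice,30.0",
    "2017-03-14T10:05,bob,not-a-number",
    "2017-03-14T10:07,carol,50.0",
    "garbage",
]


def parse(line):
    """Split a line into fields; return None if it is malformed."""
    parts = line.split(",")
    if len(parts) != 3:
        return None
    timestamp, user, amount = parts
    try:
        return {"ts": timestamp, "user": user, "amount": float(amount)}
    except ValueError:
        return None


def is_valid(record):
    """Keep only records that parsed successfully."""
    return record is not None


def to_cents(record):
    """Hypothetical domain rule: store amounts as integer cents."""
    return {**record, "amount": round(record["amount"] * 100)}


# The pipeline only wires the functions together; it says nothing about
# how or where they run. Assuming an existing SparkContext `sc`, the same
# chain could be written as:
#   sc.parallelize(raw_lines).map(parse).filter(is_valid).map(to_cents)
cleaned = list(map(to_cents, filter(is_valid, map(parse, raw_lines))))
print(cleaned)
```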

To make code scalable and well optimized, you will have to leverage the various Spark APIs, and this is similar in Scala and Python. For Python, code-execution performance is good compared with competing languages. Scala supports Spark natively, which comes in handy for low-level tuning, debugging and optimization. It is worth noting, though, that Spark code is prone to serialization exceptions.
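The serialization issue arises because Spark ships your functions, and whatever objects they reference, to worker processes. PySpark serializes functions by pickling (it bundles `cloudpickle` for this), so the standard `pickle` module is enough to reproduce the failure mode locally. In the sketch below, `LockedLookup` is a hypothetical helper: because it holds a `threading.Lock`, it cannot be pickled, whereas the plain dictionary it wraps can. One common remedy is to ship only plain data and create non-serializable resources on the worker side.

```python
import pickle
import threading


class LockedLookup:
    """Hypothetical helper that guards a lookup table with a lock."""

    def __init__(self, table):
        self.table = table
        self.lock = threading.Lock()  # locks cannot be pickled

    def lookup(self, key):
        with self.lock:
            return self.table.get(key)


def can_serialize(obj):
    """Return True if obj survives a pickle round trip."""
    try:
        pickle.loads(pickle.dumps(obj))
        return True
    except (TypeError, pickle.PicklingError):
        return False


table = {"us": "United States"}
print(can_serialize(LockedLookup(table)))  # prints False: the lock blocks pickling
print(can_serialize(table))                # prints True: plain data pickles fine
```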

In short, Scala is better when it comes to engineering, while the two languages are roughly equivalent in terms of Spark integration and functionality.

- Out-of-the-box machine learning/statistics packages

This factor depends mainly on the size of your data and on the requirements of the project, for example whether it is just a prototype or something that must be deployed and maintained in a production system. When the data fits in memory, users usually choose Python, which offers a broad selection of already-implemented packages covering almost every need. Scala provides only the basics, but for purely production-oriented work Scala is the better engineering choice.

- Documentation / Community

Both Scala and Python have good, comparable communities for software development. But when it comes to the data science community and interesting data science projects, Python is the top pick, bar none.

- Interactive Exploratory Analysis and built-in visualization tools

An example of this is IPython, which supports various kernels. If you simply want to open a web-based notebook and start writing and interacting with code, IPython and IScala are very similar.

In terms of built-in visualizations, on the Scala side Spark Notebook includes a very rudimentary built-in visualization library, the simple but acceptable WISP library, and a few wrappers around JavaScript technologies such as D3 and Rickshaw. Python's design facilitates better integration with Spark.

Here are two further factors that help data scientists and analysts judge the importance of Python relative to Scala for Apache Spark:

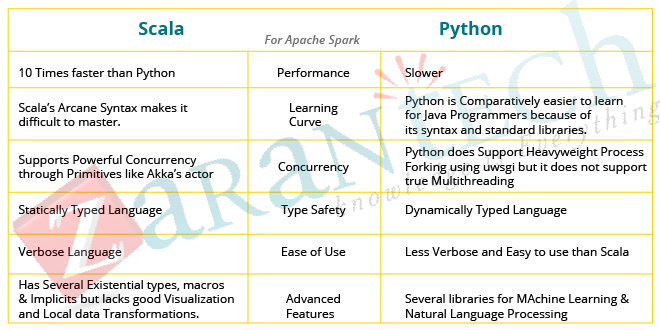

Scala vs Python – Learning Curve

The Scala language offers several kinds of syntactic sugar (syntax designed to make code easier to read and express) for programming with Apache Spark, so big data professionals need to be extra careful when learning Scala for Spark. Programmers find Scala's syntax for Spark programming extensive, and a few Scala libraries define arbitrary symbolic operators that inexperienced programmers find hard to understand. The payoff is that Scala is a sophisticated language with more flexible syntax than Java or Python. There is rising demand for Scala developers because big data companies value developers who have mastered a productive and robust programming language for data analysis and processing in Apache Spark.

Python is relatively easy for Java programmers to learn because of its syntax and standard libraries. Learning Scala, on the other hand, expands a programmer's knowledge of novel type-system abstractions, functional programming and immutable data.

Scala vs Python – Ease of Use

Scala and Python are both expressive languages in the context of Spark, so the desired functionality can be achieved with either. Either way, the programmer creates a Spark context and calls functions on it. Python is more user-friendly than Scala and less verbose, which makes it easy for developers to write Spark scripts in Python. Ease of use is subjective because it comes down to the programmer's personal preference, but by and large developers around the world find Python much easier to use.
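Word count is the customary first Spark program, and it shows the flat-map, map and reduce-by-key pattern that Spark code follows in either language. The sketch below is plain Python that mimics those three steps on an in-memory list, so it runs without Spark. The helper `reduce_by_key` is a hypothetical stand-in written for this example, not a Spark function; in PySpark the equivalent chain on an RDD would use the `flatMap`, `map` and `reduceByKey` methods.

```python
from itertools import chain
from operator import add

lines = ["to be or not to be", "that is the question"]


def reduce_by_key(pairs, func):
    """Hypothetical local stand-in for Spark's reduceByKey.

    Combines all values that share a key using the binary function `func`.
    """
    combined = {}
    for key, value in pairs:
        combined[key] = func(combined[key], value) if key in combined else value
    return combined


# flatMap: split every line into words and flatten the result.
words = chain.from_iterable(line.split() for line in lines)
# map: pair each word with an initial count of 1.
pairs = ((word, 1) for word in words)
# reduceByKey: sum the counts for each word.
counts = reduce_by_key(pairs, add)

# Most frequent words first, ties broken alphabetically.
print(sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])))
```

The brevity here is the point the paragraph makes: the whole job is a handful of short, readable lines.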

Conclusion

In conclusion, Scala is faster and moderately easy to use, while Python is slower but very easy to use. Since the Apache Spark framework is written in Scala, mastering Scala helps big data developers dig into the source code with ease when something does not work as expected. Using Python, on the other hand, tends to increase user interaction and satisfaction. Using Scala for Spark also gives access to the latest Spark features, since these usually appear in Scala first and are ported to Python later.

Deciding between Scala and Python for Spark depends on which features best fit the project's needs, as each has its own pros and cons. Before choosing a language for programming with Apache Spark, developers should learn both Scala and Python to become familiar with their features. Having learned both, it should be fairly easy to decide when to use Scala for Spark and when to use Python. While the choice of programming language for Apache Spark ultimately depends on the problem the project needs to solve, there is no doubt about the paramount importance of Python in Apache Spark and Scala training.
